Task 1: Learn and Compare Terraform Providers

✨ Objective: Learn about Terraform providers and compare their features across different cloud platforms.

📚 Steps:

- Spend time learning about Terraform providers and their significance in managing resources across various cloud platforms or infrastructure services.

Terraform Providers:

Terraform providers act as an abstraction layer between Terraform and the various services it supports. They encapsulate the set of APIs that each specific service exposes and translate that into a format that Terraform can understand and work with. For example, there are providers for AWS, Google Cloud Platform, Azure, and many other cloud or on-premises services.
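As a rough illustration of how providers plug into a configuration, the sketch below declares the three providers mentioned above in one required_providers block. The version constraints are illustrative assumptions, not recommendations.

```hcl
terraform {
  required_providers {
    # Each entry maps a local name to a provider in the Terraform Registry.
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0" # assumed constraint, adjust as needed
    }
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # assumed constraint
    }
    google = {
      source  = "hashicorp/google"
      version = "~> 4.0" # assumed constraint
    }
  }
}
```

Running terraform init in a directory containing this block downloads each listed provider plugin.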

Why Terraform is considered an essential tool for developers:

It’s open-source: Terraform has many contributors who regularly build add-ons for the platform. So, regardless of the platform you’re using, you will easily find support, extensions, and plugins. The open-source environment also encourages new benefits and improvements, so the tool is constantly and rapidly evolving.

It’s platform-agnostic: Platform-agnostic means that the product is not limited to one platform or operating system. In Terraform’s case, it means you can use it with any cloud service provider that has a Terraform provider, whereas vendor-specific IaC tools such as AWS CloudFormation are tied to a single platform.

It favors immutable infrastructure: Many configuration management tools work on mutable infrastructure, meaning servers are changed in place to accommodate things like new storage servers or middleware upgrades. Unfortunately, mutable infrastructure is susceptible to configuration drift. Configuration drift occurs when the actual state of servers or other infrastructure elements "drifts" away from the original configuration under the weight of accumulated changes. Terraform encourages an immutable approach: when a change cannot be applied in place, the affected resource is replaced with a new one built from the updated configuration. As a bonus, if the configuration files are kept in version control, a previous version can be re-applied to perform a rollback, much like how you can restore a laptop's configuration to an earlier saved version.
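To make the replace-instead-of-modify idea concrete, here is a minimal sketch. The resource name and AMI ID are placeholders. In the AWS provider, changing the ami argument of an aws_instance forces Terraform to replace the instance rather than modify it, and the lifecycle block asks Terraform to create the replacement before destroying the old one.

```hcl
resource "aws_instance" "web" {
  # Changing this value forces a replacement of the instance.
  ami           = "ami-0123456789" # placeholder AMI ID
  instance_type = "t2.micro"

  lifecycle {
    # Build the new instance first, then remove the old one.
    create_before_destroy = true
  }
}
```

When such a change is planned, terraform plan marks the resource as "must be replaced", so the effect is visible before anything is applied.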

- Compare the features and supported resources for each cloud platform's Terraform provider to gain a better understanding of their capabilities.

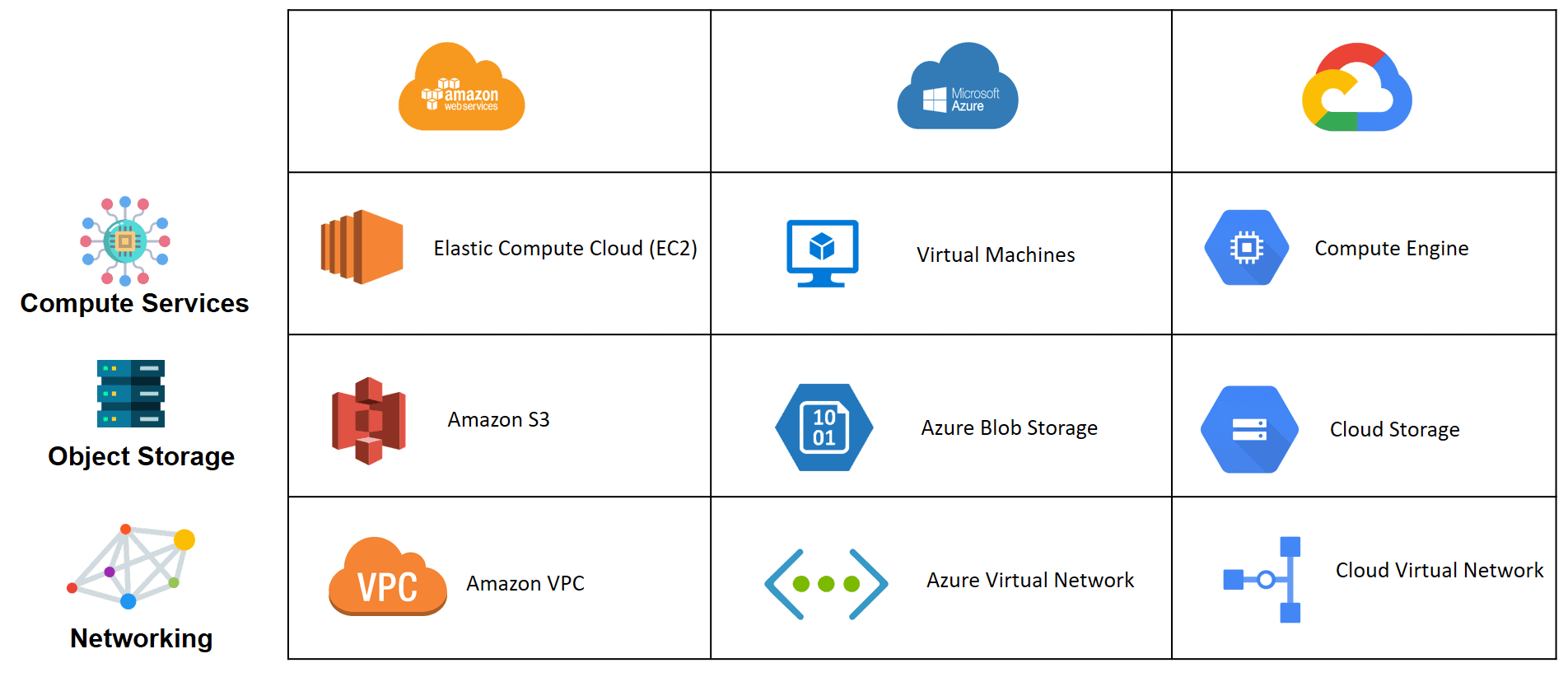

Below we compare the three major cloud providers:

AWS vs Azure vs GCP

| AWS | Azure | GCP |
| --- | --- | --- |
| AWS started services in 2006. | Azure started services in 2010. | GCP launched in 2008. |
| The provider supports a wide range of AWS services and features, including EC2 instances, S3 buckets, RDS databases, VPCs, and IAM roles. | The provider covers a broad array of Azure services, including virtual machines, storage accounts, SQL databases, Kubernetes clusters, and IoT Hub. | The provider covers key GCP services, including Compute Engine VMs, Cloud Storage, Bigtable, Kubernetes Engine, and Pub/Sub. |
| Large and complex range of service offerings. | Low-quality support. | Support is fairly costly: about $150 per month for the Silver class, which is the most basic level. |
| AWS allows users to easily integrate services such as Amazon EC2, Amazon S3, and Elastic Beanstalk. | Azure allows users to easily integrate services such as Azure VMs, Azure App Service, and SQL databases. | GCP allows users to integrate services such as Compute Engine, Cloud Storage, and Cloud SQL. |
| In AWS, packages are often managed with external or third-party software such as Artifactory. | Azure has a package manager tool called Azure Artifacts to manage packages such as NuGet and Maven. | Artifact Registry is a single place for your organisation to manage container images and language packages (such as Maven and npm). |
| For common workloads, Amazon EC2 provides general-purpose virtual machines (VMs), compute-optimized types for applications requiring high-performance processing, and memory-optimized types for programs that benefit from lots of memory, such as programs that process data in memory. | Azure offers similar general-purpose, compute-optimized, and memory-optimized VMs, as well as GPU-type VMs optimized for accelerators. | GCP Compute Engine supports general-purpose VMs along with compute-optimized types that offer high performance per CPU core. Similar to AWS, there is a shared-core (burstable) VM option, as well as memory-optimized and accelerator-optimized virtual machines. A storage-optimized option is not available on GCP as of the time of publication. |
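One way to get a feel for how the providers differ is to declare the same kind of resource in each. The sketch below defines an object storage bucket (or its closest equivalent) on all three platforms. All names and locations are hypothetical, and it assumes the three providers are already configured.

```hcl
# AWS: an S3 bucket needs little more than a name.
resource "aws_s3_bucket" "example" {
  bucket = "my-example-bucket-12345" # hypothetical; must be globally unique
}

# Azure: a storage account lives inside a resource group.
resource "azurerm_resource_group" "example" {
  name     = "example-rg" # hypothetical
  location = "East US"
}

resource "azurerm_storage_account" "example" {
  name                     = "examplestorage12345" # hypothetical; lowercase letters and digits only
  resource_group_name      = azurerm_resource_group.example.name
  location                 = azurerm_resource_group.example.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

# GCP: a Cloud Storage bucket needs a name and a location.
resource "google_storage_bucket" "example" {
  name     = "my-example-bucket-12345" # hypothetical; must be globally unique
  location = "US"
}
```

The resource types and required arguments mirror each platform's own concepts, which is why configurations are not portable between providers even though the Terraform workflow is the same.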

Task 2: Provider Configuration and Authentication

✨ Objective: Explore provider configuration and set up authentication for each provider.

📚 Steps:

- Explore provider configuration and authentication mechanisms in Terraform.

AWS as a provider

To work with AWS, we will use the AWS provider from the HashiCorp namespace. The ~> 2.70 constraint allows version 2.70 and any later 2.x release, but not 3.0 or above.

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 2.70"
        }
      }
    }

    provider "aws" {
      region = "us-east-1"
    }
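A configuration can also hold more than one instance of the same provider, for example to work in two regions. The following is a minimal sketch using Terraform's alias meta-argument. The alias name, second region, and VPC resource are illustrative assumptions.

```hcl
# Default AWS provider configuration.
provider "aws" {
  region = "us-east-1"
}

# Second configuration of the same provider, selected by its alias.
provider "aws" {
  alias  = "west"
  region = "us-west-2"
}

# This resource is created in us-west-2 because it selects the aliased provider.
resource "aws_vpc" "west_vpc" {
  provider   = aws.west
  cidr_block = "10.1.0.0/16"
}
```

Resources without a provider argument keep using the default (unaliased) configuration.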

Azure as a Provider

To use Azure with Terraform, you’ll need the Azure provider, known as azurerm. You will need to authenticate using a Service Principal with a Client Secret, or by using the Azure CLI.

Here’s an example with Service Principal and Client Secret:

    provider "azurerm" {
      features {}

      subscription_id = "your_subscription_id"
      client_id       = "your_client_id"
      client_secret   = "your_client_secret"
      tenant_id       = "your_tenant_id"
    }
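Hardcoding a client secret is fine for a quick demo, but a common alternative is to pass the values in as input variables. The sketch below shows one way to do that. The variable names are assumptions chosen for this example, and sensitive = true requires Terraform 0.14 or later.

```hcl
variable "subscription_id" { type = string }
variable "client_id" { type = string }
variable "tenant_id" { type = string }

variable "client_secret" {
  type      = string
  sensitive = true # hides the value in plan and apply output
}

provider "azurerm" {
  features {}

  subscription_id = var.subscription_id
  client_id       = var.client_id
  client_secret   = var.client_secret
  tenant_id       = var.tenant_id
}
```

The values can then be supplied at run time, for example through environment variables named TF_VAR_client_secret and so on, instead of being stored in the .tf file.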

GCP as a Provider

To work with GCP in Terraform, you will use the Google provider. The provider configuration for GCP typically requires the project ID and credentials in JSON format.

Here’s an example:

    provider "google" {
      project     = "your_gcp_project_id"
      credentials = file("path_to_your_service_account_json_file")
    }

The file function is used to load the JSON credentials file that you’ve downloaded from GCP.
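The Google provider also accepts a default region and zone, which saves repeating them on every resource. This is a small sketch along those lines. The region and zone values are illustrative assumptions.

```hcl
provider "google" {
  project     = "your_gcp_project_id"
  region      = "us-central1"   # assumed default region
  zone        = "us-central1-a" # assumed default zone
  credentials = file("path_to_your_service_account_json_file")
}
```

Resources that do not set their own region or zone fall back to these provider-level defaults.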

- Set up authentication for each provider on your local machine to establish the necessary credentials for interaction with the respective cloud platforms.

- AWS

Access and Secret Keys: You can configure an AWS access key ID and secret access key to authenticate with AWS services. These keys can be created in AWS IAM.

export AWS_ACCESS_KEY_ID=your-access-key-id

export AWS_SECRET_ACCESS_KEY=your-secret-access-key

- Azure

Service Principal: To authenticate with Azure, you can create a service principal and use its credentials. Use the Azure CLI to create a service principal:

az ad sp create-for-rbac --name ServicePrincipalName

This command will output a JSON object with the required credentials. Store them securely.

export ARM_CLIENT_ID=your-client-id

export ARM_CLIENT_SECRET=your-client-secret

export ARM_SUBSCRIPTION_ID=your-subscription-id

export ARM_TENANT_ID=your-tenant-id

- GCP

Service Account JSON Key: To authenticate with GCP, you'll need a service account JSON key. Create a service account in the GCP Console and download the JSON key file.

export GOOGLE_APPLICATION_CREDENTIALS=/path/to/your/key.json
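Once the environment variables above are set, one way to check that each provider can actually authenticate is to read back the identity it is using. The sketch below assumes the credentials come only from the environment, so the provider blocks carry no secrets. The output names are hypothetical.

```hcl
provider "aws" {
  region = "us-east-1"
}

provider "azurerm" {
  features {}
}

provider "google" {
  project = "your_gcp_project_id"
}

# Each data source reports which identity or project the provider resolved.
data "aws_caller_identity" "current" {}
data "azurerm_client_config" "current" {}
data "google_client_config" "current" {}

output "aws_account_id" {
  value = data.aws_caller_identity.current.account_id
}

output "azure_subscription_id" {
  value = data.azurerm_client_config.current.subscription_id
}

output "gcp_project" {
  value = data.google_client_config.current.project
}
```

If a credential is missing or invalid, terraform plan fails for that provider with an authentication error, which makes this a cheap smoke test before creating real resources.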

Task 3: Practice Using Providers

✨ Objective: Gain hands-on experience using Terraform providers for your chosen cloud platform.

📚 Steps:

- Choose a cloud platform (AWS, Azure, Google Cloud, or others) as your target provider for this task.

- Create a Terraform configuration file named main.tf and configure the chosen provider within it.

- Authenticate with the chosen cloud platform using the appropriate authentication method (e.g. access keys, service principals, or application default credentials).

- Deploy a simple resource using the chosen provider. For example, if using AWS, you could provision a Virtual Private Cloud (VPC), Subnet Group, Route Table, Internet Gateway, or a virtual machine.

- 🔄 Experiment with updating the resource configuration in your main.tf file and apply the changes using Terraform. Observe how Terraform intelligently manages the resource changes.

- Once you are done experimenting, use the terraform destroy command to clean up and remove the created resources.

We will create a Terraform configuration that provisions an EC2 instance in AWS.

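    # main.tf
    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 3.0"
        }
      }
    }

    provider "aws" {
      region = "us-east-1"
    }

    # aws_instance is the AWS provider's resource type for an EC2 instance.
    resource "aws_instance" "myinstance" {
      ami           = "ami-0123456789" # placeholder; replace with a valid AMI ID for us-east-1
      instance_type = "t2.micro"

      # The instance name is set through the Name tag; the region comes from the provider block.
      tags = {
        Name = "myinstance"
      }
    }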
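AMI IDs differ per region and change over time, so instead of hardcoding one, the ID can be looked up with a data source. This is a minimal sketch. The name filter targets Amazon Linux 2 images and is an assumption, as are the resource names.

```hcl
# Look up the most recent Amazon Linux 2 AMI published by Amazon.
data "aws_ami" "amazon_linux" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"] # assumed name pattern
  }
}

resource "aws_instance" "from_lookup" {
  ami           = data.aws_ami.amazon_linux.id
  instance_type = "t2.micro"

  tags = {
    Name = "from-lookup" # hypothetical name
  }
}
```

The lookup runs during terraform plan, so the resolved AMI ID appears in the plan output before anything is created.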

You can configure an AWS access key ID and secret access key to authenticate with AWS services. These keys can be created in AWS IAM.

export AWS_ACCESS_KEY_ID=your-access-key-id

export AWS_SECRET_ACCESS_KEY=your-secret-access-key

We will also create a VPC and attach an internet gateway to it:

    resource "aws_vpc" "example" {
      cidr_block           = "10.0.0.0/16"
      enable_dns_support   = true
      enable_dns_hostnames = true
    }

    resource "aws_internet_gateway" "example" {
      vpc_id = aws_vpc.example.id
    }
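A VPC and an internet gateway alone do not route any traffic. As a possible next step, the sketch below adds a public subnet and a route table that sends outbound traffic through the gateway. It refers to the aws_vpc.example and aws_internet_gateway.example resources defined above. The subnet CIDR and the resource names are assumptions.

```hcl
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.example.id
  cidr_block              = "10.0.1.0/24" # assumed range inside the VPC CIDR
  map_public_ip_on_launch = true
}

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.example.id

  # Default route: send all non-local traffic to the internet gateway.
  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.example.id
  }
}

# Attach the route table to the subnet.
resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}
```

Because each resource references the IDs of the others, Terraform works out the creation order on its own.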

We then run terraform init, which initializes the working directory and downloads the provider plugins required by the .tf files in it:

terraform init

To preview the changes that Terraform will make, you can run the below command:

terraform plan

We can then apply the configuration. Terraform shows the plan, asks for confirmation, and then creates the resources:

terraform apply
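To see the IDs of the created resources without opening the AWS console, output values can be added to the configuration. The sketch below assumes the aws_vpc.example and aws_internet_gateway.example resources from above. The output names are hypothetical.

```hcl
output "vpc_id" {
  description = "ID of the example VPC"
  value       = aws_vpc.example.id
}

output "internet_gateway_id" {
  description = "ID of the internet gateway attached to the VPC"
  value       = aws_internet_gateway.example.id
}
```

Terraform prints these values at the end of terraform apply, and terraform output shows them again later.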

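To experiment, we can tweak the file and see how Terraform manages the change. Here we add a Name tag to the VPC; running terraform plan again should show an in-place update rather than a replacement:

    resource "aws_vpc" "example" {
      cidr_block           = "10.0.0.0/16"
      enable_dns_support   = true
      enable_dns_hostnames = true

      # Newly added tag (illustrative value).
      tags = {
        Name = "example-vpc"
      }
    }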
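Another experiment is to move the CIDR block into an input variable so it can be changed without editing the resource. This sketch would replace the aws_vpc block above. The variable name is an assumption. In the AWS provider, changing cidr_block on an existing VPC forces Terraform to destroy and recreate it.

```hcl
variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"
}

resource "aws_vpc" "example" {
  cidr_block           = var.vpc_cidr # changing this forces a new VPC
  enable_dns_support   = true
  enable_dns_hostnames = true
}
```

Running terraform plan -var="vpc_cidr=10.1.0.0/16" then shows the VPC, and anything that depends on it, marked for replacement.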

Finally, to remove everything Terraform created, we run the destroy command:

terraform destroy
